Algorithmic biases. Beyond age: Nationality and Race in Action

Biases are everywhere, largely because we are a prejudiced society even when we don't realize it. Here are just two examples; there are many more.

Made with Nano Banana

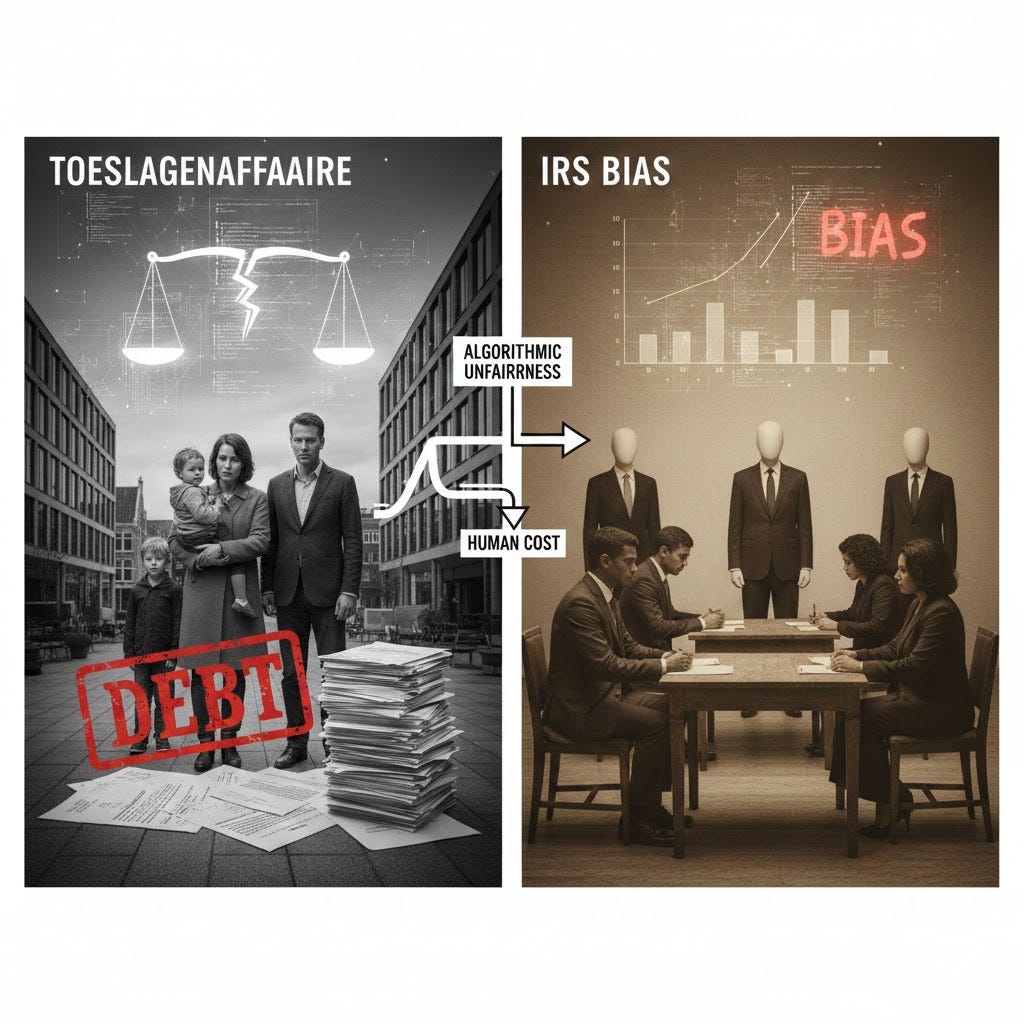

Algorithmic biases go beyond obvious demographic attributes like age (as seen in previous posts; as a Gen X-er, I tend to write about that bias). They are rooted in the very architecture of machine learning (ML) and rule-based systems. These biases emerge from three main sources: institutional prejudices reflected in business rule design, inherently biased training data, and the amplification of spurious correlations. When deployed without fairness audits, these seemingly neutral systems perpetuate and scale historical inequalities. They rely on variables such as nationality, zip code, or family relationships that act, often inadvertently, as proxies for racial or national-origin groups, as the Dutch Toeslagenaffaire and the IRS audits in the US show.

Toeslagenaffaire (Netherlands)

In 2019, a Dutch-Moroccan family with dual nationality went on public television after being labeled fraudulent and forced to repay €50,000 in childcare subsidies, bringing thousands of similar cases to light. Journalistic and parliamentary investigations revealed that the machine learning algorithm of the Belastingdienst (the tax authority) used non-Dutch nationality as a fraud risk proxy and had been trained on prior audit data that over-scrutinized migrants. The Data Protection Authority (AP) confirmed the use of automatic blacklists and ethnic-origin filters, and in January 2021 the government resigned over the scandal; the Donner committee described a vicious cycle of sampling bias that ran without human intervention.

IRS: Racial Bias Revelation (US)

In 2023, Stanford researchers published an analysis of 9 years of IRS audit data that found audit rates 3-5 times higher for African American taxpayers claiming the Earned Income Tax Credit (EITC), even though the IRS does not use race data directly. The Dependent Database (DDB), built on expert rules, and the Systems Research and Application (SRA) machine learning model prioritized cases by risk scores built on variables such as multiple residences and family relationships, which acted as inadvertent proxies for race. The IRS confirmed the findings later in 2023; a 2024 GAO report criticized the lack of bias testing and periodic reviews and recommended algorithmic transparency.

How Biases Were Encoded: Algorithmic Mechanisms

Both cases illustrate how automated decision systems translated systemic prejudices into high-impact automated discrimination, using efficiency as an excuse to bypass equity.

Toeslagenaffaire Algorithm: Sampling Bias and Proxy

The Belastingdienst system employed a machine learning classifier designed to predict fraud risk.

Sampling Bias: The model was trained on historical audit data that already over-examined migrant families, making the initial dataset inherently biased.

Explicit Proxy Use: The classifier identified non-Dutch nationality as a strong fraud predictor, using it as a direct proxy in decisions.

Feedback Loop: By using this variable, the model recommended more audits on migrants, generating new biased data that fed back into the model, creating a vicious cycle of over-auditing that entrenched the initial bias. Additionally, automatic “blacklists” maximized harm by eliminating human oversight.
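The feedback loop described above can be illustrated with a toy simulation. This is only a sketch under invented assumptions, not the Belastingdienst's actual model: the two groups `A` and `B`, the 5% error rate, the 70% starting share, and the `focus` parameter are all hypothetical. Both groups have the same true error rate, yet the group that was over-audited in the past keeps receiving most of the audits.

```python
def simulate_feedback_loop(rounds=5, audits_per_round=1000.0,
                           true_error_rate=0.05, initial_share_a=0.7,
                           focus=1.0):
    """Toy, deterministic model of an audit feedback loop.

    Hypothetical groups A and B share the SAME true error rate. Each round,
    audits are split according to how many cases were flagged in each group
    so far. focus > 1 means selection concentrates more than proportionally
    on the group with more past flags.

    Returns group A's share of the audits in each round.
    """
    # Biased history: group A received most of the past audits,
    # so it also accumulated most of the past flags.
    flagged_a = initial_share_a * audits_per_round * true_error_rate
    flagged_b = (1.0 - initial_share_a) * audits_per_round * true_error_rate

    shares = []
    for _ in range(rounds):
        # "Learned" risk: weight each group by its past flags.
        weight_a = flagged_a ** focus
        weight_b = flagged_b ** focus
        share_a = weight_a / (weight_a + weight_b)
        shares.append(share_a)

        # Audits find errors at the same rate in both groups, but the group
        # that is audited more still produces more new flags.
        flagged_a += share_a * audits_per_round * true_error_rate
        flagged_b += (1.0 - share_a) * audits_per_round * true_error_rate
    return shares


if __name__ == "__main__":
    print("proportional:", [round(s, 3) for s in simulate_feedback_loop()])
    print("concentrated:", [round(s, 3) for s in simulate_feedback_loop(focus=2.0)])
```

With proportional allocation (`focus=1.0`) the initial imbalance never corrects itself, even though both groups behave identically. When selection concentrates on the "riskiest" group (`focus=2.0`), group A's share of audits grows every round.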

Moreover, an “all or nothing” policy forced parents to repay all received aid (sometimes exorbitant sums) for minor administrative errors, leading many families to bankruptcy and extreme financial hardship.

IRS Algorithms: Bias from Inadvertent Correlation

The IRS uses the rule-based Dependent Database (DDB) and the Systems Research and Application (SRA) model, which employs machine learning to assign a risk score.

Inadvertent Proxy Bias: The SRA did not use direct racial data. However, it prioritized variables like zip code or family relationship structures.

Socioeconomic Correlation: These variables are strongly correlated with race due to systemic US inequalities. Thus, the algorithm inadvertently penalized African American taxpayers.

Lack of Disaggregation: The key failure was the absence of equity testing in SRA model metrics. By not disaggregating audit outcomes by racial group, the IRS failed to detect disparities in audit rates, allowing the model to amplify hidden systemic prejudice.
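A disaggregated check of the kind that was missing can be very small. The sketch below assumes a group label is available for each taxpayer, which is itself an assumption: when an agency does not record race, those labels may have to be estimated. The function names and the input format are hypothetical.

```python
from collections import defaultdict


def audit_rates_by_group(records):
    """Compute the audit rate per group.

    records: iterable of (group, was_audited) pairs, e.g. ("X", True).
    Returns a dict mapping each group to audited / total.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [audited, total]
    for group, was_audited in records:
        counts[group][0] += int(was_audited)
        counts[group][1] += 1
    return {group: audited / total for group, (audited, total) in counts.items()}


def disparity_ratio(rates, group, reference):
    """How many times more often `group` is audited than `reference`."""
    return rates[group] / rates[reference]


if __name__ == "__main__":
    # Synthetic data for illustration only: 40 of 1,000 audited in group X,
    # 10 of 1,000 audited in group Y.
    records = ([("X", True)] * 40 + [("X", False)] * 960
               + [("Y", True)] * 10 + [("Y", False)] * 990)
    rates = audit_rates_by_group(records)
    print(rates)
    print(disparity_ratio(rates, "X", "Y"))
```

The overall audit rate in this synthetic data is 2.5%, which looks unremarkable on its own. The gap between groups only becomes visible once the outcome is broken down by group.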

Case Conclusions: Shared Lessons

The Toeslagenaffaire in the Netherlands and IRS audit disparities in the United States had profound consequences for public administration, administrative law, and AI regulation. Both events exposed the risks of automation without adequate oversight and algorithmic discrimination.

Both cases demonstrate that algorithms deployed without rigorous audits perpetuate discrimination by encoding historical prejudices into data and rules, devastating communities singled out by nationality or race.

The Dutch case exemplifies the amplification of sampling bias: the ML classifier used a direct proxy (nationality) in data already contaminated by historical prejudices, creating a vicious cycle that culminated in the government’s fall and in compensation payments running into the millions. It also highlighted the need for mandatory “algorithmic registers” for transparency.

In contrast, the IRS case shows bias from inadvertent correlation, where ostensibly neutral variables become race proxies because of deep socioeconomic inequality, leading African American taxpayers to be audited up to five times more often. This confirms that the absence of bias testing and periodic updates exacerbates systemic inequalities.

This was not due to African American taxpayers committing more intentional fraud, but because the algorithm was optimized to detect errors in refundable credits (like the Earned Income Tax Credit or EITC), frequently claimed by low-income and minority populations, rather than focusing on high-income complex tax evasion.
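One rough way to test whether an ostensibly neutral variable is acting as a proxy is to ask how well it predicts group membership on its own. The sketch below is an illustration under assumptions, not a method taken from either case: `proxy_strength`, the zip codes `Z1` and `Z2`, and the groups `P` and `Q` are all made up.

```python
from collections import Counter, defaultdict


def proxy_strength(pairs):
    """Measure how well a feature predicts group membership.

    pairs: list of (feature_value, group) tuples.
    Returns (baseline, accuracy):
      baseline - accuracy of always guessing the most common group
      accuracy - accuracy of guessing the most common group per feature value
    A large gap between the two suggests the feature works as a proxy.
    """
    total = len(pairs)
    overall = Counter(group for _, group in pairs)
    baseline = overall.most_common(1)[0][1] / total

    groups_by_value = defaultdict(Counter)
    for value, group in pairs:
        groups_by_value[value][group] += 1

    # For each feature value, count the records the majority guess gets right.
    correct = sum(c.most_common(1)[0][1] for c in groups_by_value.values())
    return baseline, correct / total


if __name__ == "__main__":
    # Synthetic, residentially segregated data for illustration only.
    pairs = ([("Z1", "P")] * 90 + [("Z1", "Q")] * 10
             + [("Z2", "P")] * 10 + [("Z2", "Q")] * 90)
    baseline, accuracy = proxy_strength(pairs)
    print(f"baseline={baseline:.2f} accuracy={accuracy:.2f}")
```

If knowing the zip code lets you guess the group far better than chance, a model that uses the zip code has indirect access to the group, even when the protected attribute is never an input.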

Both cases underscore the same ethical imperatives: train on de-biased data, require explanations that humans can understand, and review systems continuously, so that public AI does not become a tool of injustice rather than efficiency.

The key takeaway is that no one wants to discriminate (in principle), but the lack of human oversight, combined with historical prejudices at various stages of algorithm construction, can lead to it.

What an article, Marcela. Although, to be honest, I can't say that I'm entirely surprised by your findings. This is exactly why we have to be extra thoughtful and careful with the information we train these algorithms and Large Language Models with. They are only as fair as the information we program them with.

This is a powerful reminder that biased systems usually come from biased histories.

What stands out most is how quietly proxy data can turn into real harm when no one checks it.

It’s why transparency and constant oversight aren’t optional; they’re the bare minimum.